Dynamic Capacity Allocation

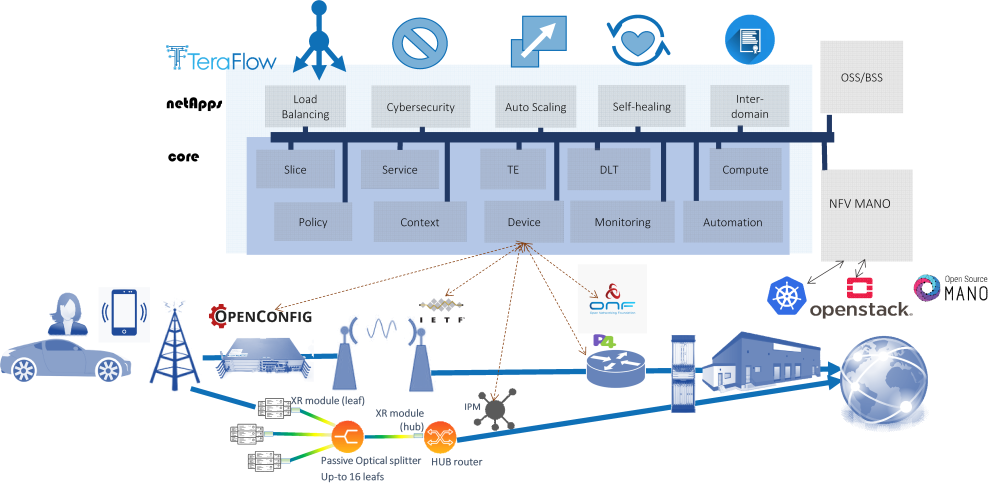

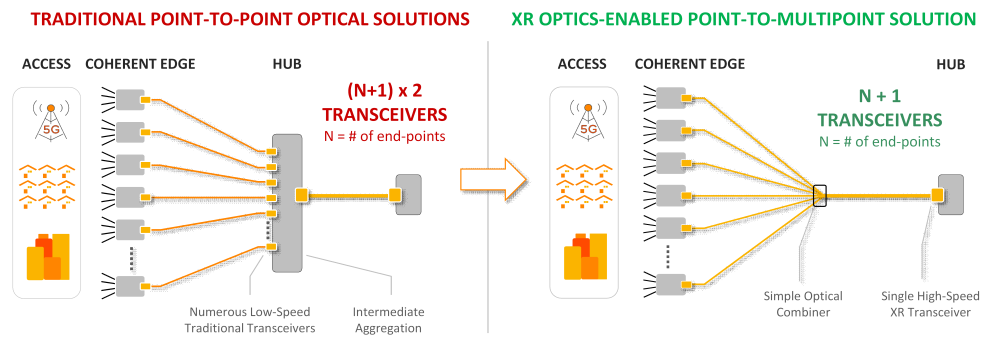

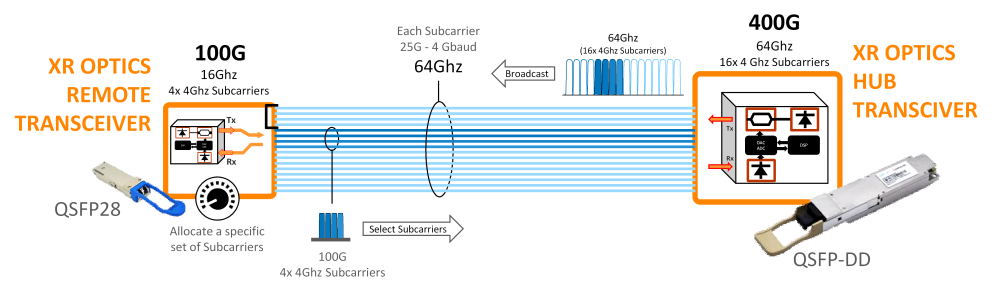

XR optics enables a consistent network architecture based on a common “currency” of 25 Gb/s digital subcarriers between hub and spoke (leaf) locations. A simple, cost-effective splitter/combiner broadcasts the digital subcarriers to the leaf locations. The 400G capacity at the hub occupies 64 GHz of spectrum and is divided into 16 individual 4 GHz digital subcarriers (Figure 2), which together carry the 400G payload.
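The subcarrier arithmetic above can be checked in a few lines of Python. This is a minimal sketch: the constants come from the text, while the center-frequency offsets assume the subcarriers tile the 64 GHz band edge to edge with no guard bands, which is a simplification and not a statement about the actual XR spectral layout.

```python
# Constants taken from the text: 16 digital subcarriers, 4 GHz and 25 Gb/s each.
SUBCARRIER_RATE_GBPS = 25
SUBCARRIER_WIDTH_GHZ = 4
NUM_SUBCARRIERS = 16


def hub_totals(n_subcarriers: int = NUM_SUBCARRIERS) -> tuple:
    """Return (payload in Gb/s, occupied spectrum in GHz) for n subcarriers."""
    return n_subcarriers * SUBCARRIER_RATE_GBPS, n_subcarriers * SUBCARRIER_WIDTH_GHZ


def subcarrier_offsets_ghz(n_subcarriers: int = NUM_SUBCARRIERS) -> list:
    """Center of each subcarrier relative to the carrier center, in GHz.

    ASSUMPTION: subcarriers sit edge to edge with no guard bands.
    """
    half_band = n_subcarriers * SUBCARRIER_WIDTH_GHZ / 2
    return [-half_band + SUBCARRIER_WIDTH_GHZ * (i + 0.5) for i in range(n_subcarriers)]


if __name__ == "__main__":
    print(hub_totals())                  # (400, 64)
    print(subcarrier_offsets_ghz()[:3])  # [-30.0, -26.0, -22.0]
```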

Each leaf module is synchronized to the hub signal and configured to receive its assigned subcarriers. For upstream traffic, each leaf site transmits its selected subcarriers back to the hub.
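One way to picture the per-leaf configuration is as a map from each leaf to the set of subcarrier indices it receives (downstream) or transmits (upstream). The sketch below assumes that, within one direction, each subcarrier is assigned to at most one leaf, and validates a plan on that basis; the leaf names and the data structure are hypothetical and are not the XR or IPM data model.

```python
from typing import Dict, Set

NUM_SUBCARRIERS = 16
SUBCARRIER_RATE_GBPS = 25


def validate_assignment(plan: Dict[str, Set[int]], n: int = NUM_SUBCARRIERS) -> None:
    """Raise ValueError if an index is out of range or two leaves share a subcarrier.

    ASSUMPTION: each subcarrier is used exclusively by one leaf per direction.
    """
    owner: Dict[int, str] = {}
    for leaf, subcarriers in plan.items():
        for sc in subcarriers:
            if not 0 <= sc < n:
                raise ValueError(f"{leaf}: subcarrier {sc} outside 0..{n - 1}")
            if sc in owner:
                raise ValueError(f"subcarrier {sc} assigned to {owner[sc]} and {leaf}")
            owner[sc] = leaf


def leaf_capacity_gbps(plan: Dict[str, Set[int]]) -> Dict[str, int]:
    """Capacity each leaf gets from its assigned 25 Gb/s subcarriers."""
    return {leaf: len(scs) * SUBCARRIER_RATE_GBPS for leaf, scs in plan.items()}


if __name__ == "__main__":
    # Hypothetical downstream plan for three leaves sharing one 400G hub.
    downstream = {"leaf-a": {0, 1, 2, 3}, "leaf-b": {4, 5}, "leaf-c": set(range(6, 16))}
    validate_assignment(downstream)
    print(leaf_capacity_gbps(downstream))  # {'leaf-a': 100, 'leaf-b': 50, 'leaf-c': 250}
```

An upstream plan would be validated the same way, as a separate map.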

Using 25 Gb/s subcarriers allows capacity to be allocated dynamically and rapidly across the network, without complex planning or time-consuming truck rolls. Capacity can be allocated permanently or for a limited period of time, and the allocation can be performed manually or triggered by software automation. XR optics thereby removes the restriction that binds a service interface to a specific, predefined traffic pattern: capacity can be reallocated quickly, in software, anywhere in the network.
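The permanent-versus-temporary allocation described above can be modeled as a pool of subcarrier leases. The sketch is hypothetical: `CapacityPool`, its methods and the lease semantics are illustrative assumptions, not the IPM API. The caller passes `now` explicitly so the logic is easy to test, and either an operator or an automation system could call `allocate`.

```python
from dataclasses import dataclass
from typing import List, Optional, Set

SUBCARRIER_RATE_GBPS = 25


@dataclass
class Allocation:
    leaf: str
    subcarriers: Set[int]
    expires_at: Optional[float] = None  # None means a permanent allocation

    def active(self, now: float) -> bool:
        return self.expires_at is None or now < self.expires_at


class CapacityPool:
    """Hypothetical allocator for a hub's subcarriers (not the IPM API)."""

    def __init__(self, n_subcarriers: int = 16) -> None:
        self.n = n_subcarriers
        self.allocations: List[Allocation] = []

    def _release_expired(self, now: float) -> None:
        self.allocations = [a for a in self.allocations if a.active(now)]

    def allocate(self, leaf: str, count: int, now: float,
                 duration: Optional[float] = None) -> Set[int]:
        """Grant `count` free subcarriers to `leaf`, permanently or for `duration` seconds."""
        self._release_expired(now)
        in_use = set().union(*(a.subcarriers for a in self.allocations))
        free = sorted(set(range(self.n)) - in_use)
        if count > len(free):
            raise ValueError(f"only {len(free)} subcarriers free, {count} requested")
        chosen = set(free[:count])
        expires = None if duration is None else now + duration
        self.allocations.append(Allocation(leaf, chosen, expires))
        return chosen

    def capacity_gbps(self, leaf: str, now: float) -> int:
        """Capacity currently held by `leaf` across its active allocations."""
        held = sum(len(a.subcarriers) for a in self.allocations
                   if a.leaf == leaf and a.active(now))
        return held * SUBCARRIER_RATE_GBPS


if __name__ == "__main__":
    pool = CapacityPool()
    pool.allocate("leaf-a", 4, now=0)                 # permanent: {0, 1, 2, 3}
    pool.allocate("leaf-b", 8, now=0, duration=3600)  # one hour: {4, ..., 11}
    print(pool.capacity_gbps("leaf-b", now=1800))     # 200
    print(pool.capacity_gbps("leaf-b", now=3600))     # 0, the lease has expired
```

Once a temporary lease expires, its subcarriers return to the free pool and can be granted to another leaf.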

XR constellations and dual management solution

The optical networking industry has embraced open networking, in which transponders from different vendors can be interconnected over a third-party open line system. The industry has developed open frameworks and software tools to ensure that information is shared between the different layers and vendors, enabling alarm correlation, auto-discovery, power control loops and many other functions. This work has led to the creation of management guidelines and industry standards.

The increasing adoption of IP over DWDM and the relentless demand for capacity are fueling the deployment of coherent pluggables in host devices other than optical transport platforms, such as routers, switches and, eventually, radio units. However, managing coherent pluggables in such host devices requires pairing the transport domain with either IP domain controllers (when the pluggables are hosted in routers) or application controllers (when they are hosted in servers). While such pairing is feasible, it proves complex to implement and to operate. Moreover, as coherent pluggables gain new functions and capabilities, IP domain and application domain controllers will fall short of universally supporting the level of sophistication needed to control advanced coherent optical engines effectively. Even where the optical, IP and application domains are paired, this mode of operation results in complex signaling and management methodologies and still does not provide an optimal level of control.

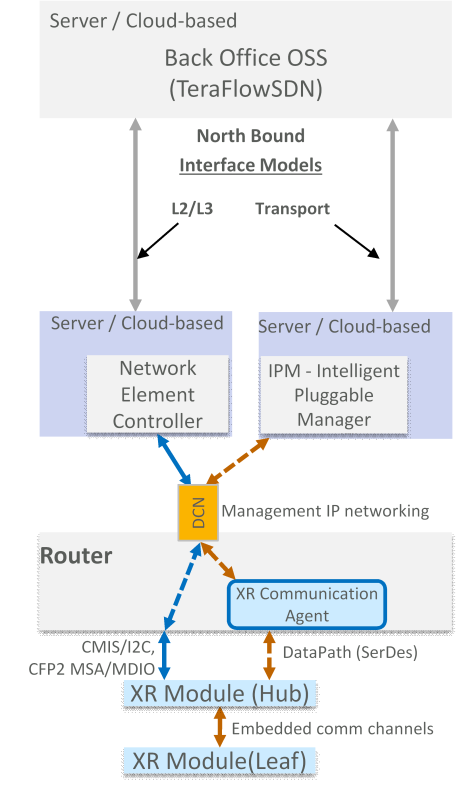

One solution to this challenge is dual management of coherent pluggables, irrespective of where they are deployed. The dual management architecture, released by the Open XR Forum, is designed to accelerate development timelines, ensure operational and management consistency with established practices in the optical domain, and let network operators benefit from new functions and applications introduced in coherent pluggables.

Furthermore, TeraFlow’s control and management architecture, which is aligned with that of the Telecom Infra Project (TIP) OOPT MUST industry initiative, assumes a separation between control of the IP domain and control of the optical domain. The dual management approach described above fits seamlessly into this blueprint.

XR optics hub-leaf constellations are dual managed, as shown in Figure 3. The router (or its management system) configures the XR pluggable's operating mode and parameters based on the standard CMIS (QSFP-DD) or MSA (CFP2) specifications. More advanced point-to-multipoint service configurations are managed with the Infinera Intelligent Pluggable Manager (IPM). IPM controls the XR modules over IPv4/IPv6 connectivity carried on the router's in-band datapath Serializer/Deserializer (SerDes) lines. Dual management allows point-to-multipoint solutions to be introduced into networks more rapidly: all optical-plane features are managed by IPM, while the host router sees the point-to-multipoint optical data plane connectivity as individual VLANs, each presenting an N×25G digital subcarrier service.

XR module IP management runs over the datapath/SerDes, using either a dedicated management VLAN or an OUI-extended Ethernet type frame (RFC 7042). The XR module sends and receives IPv4/IPv6 frames either through host data plane services or through the XR Communication Agent. Infinera provides the XR Communication Agent software component as source code via the Open XR Forum for third-party host integration. The OUI-extended Ethernet type frame is preferred because it does not require allocating a VLAN on the pluggable port.
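To make the OUI-extended option concrete, the sketch below builds and parses an Ethernet frame that uses the IEEE OUI Extended EtherType 0x88B7 referenced in RFC 7042: after the destination and source MAC addresses and the EtherType come a 3-octet OUI and a 2-octet protocol identifier, then the payload (here, an IPv4/IPv6 packet exchanged with IPM). The OUI and protocol identifier that XR modules actually use are not stated in this post, so the values below are hypothetical placeholders; padding to the minimum frame size and the FCS are left to the NIC and omitted.

```python
import struct

OUI_EXTENDED_ETHERTYPE = 0x88B7  # IEEE 802 OUI Extended EtherType (RFC 7042)
HEADER_LEN = 6 + 6 + 2 + 3 + 2   # dst MAC, src MAC, EtherType, OUI, protocol ID

# HYPOTHETICAL placeholders: the real values used by XR modules are not given here.
EXAMPLE_OUI = 0x123456
EXAMPLE_PROTOCOL_ID = 0x0001


def mac_to_bytes(mac: str) -> bytes:
    octets = mac.split(":")
    if len(octets) != 6:
        raise ValueError(f"bad MAC address: {mac}")
    return bytes(int(o, 16) for o in octets)


def build_frame(dst: str, src: str, oui: int, protocol_id: int, payload: bytes) -> bytes:
    """Ethernet header + OUI Extended EtherType + OUI + protocol ID + payload (no FCS)."""
    if not 0 <= oui <= 0xFFFFFF:
        raise ValueError("OUI must fit in 3 octets")
    return (mac_to_bytes(dst) + mac_to_bytes(src)
            + struct.pack("!H", OUI_EXTENDED_ETHERTYPE)
            + oui.to_bytes(3, "big")
            + struct.pack("!H", protocol_id)
            + payload)


def parse_frame(frame: bytes) -> tuple:
    """Return (oui, protocol_id, payload), or raise ValueError for other EtherTypes."""
    if len(frame) < HEADER_LEN:
        raise ValueError("frame too short")
    (ethertype,) = struct.unpack("!H", frame[12:14])
    if ethertype != OUI_EXTENDED_ETHERTYPE:
        raise ValueError(f"unexpected EtherType 0x{ethertype:04x}")
    oui = int.from_bytes(frame[14:17], "big")
    (protocol_id,) = struct.unpack("!H", frame[17:19])
    return oui, protocol_id, frame[HEADER_LEN:]
```

Because management traffic is identified by the EtherType and OUI fields alone, no VLAN tag has to be reserved on the port to separate it from user traffic.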

The Communication Agent provides Network Address Port Translation (NAPT), allowing XR module management to share the router host's management IP address and existing management connectivity. Once integrated and ported by the router NOS vendor, the XR Communication Agent interfaces directly with the XR module, so no specific IP data plane services need to be configured in the router to support end-to-end communication between the XR module and IPM. Without the Communication Agent, XR module IP addresses are managed with DHCPv4/DHCPv6 servers, using network-operator IP address/subnet assignments for the XR modules and the related router configurations for data-plane connections and services, just like any other generic managed IP device with a dynamic IP address in the network.
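The NAPT function mentioned above can be illustrated with a toy translation table: each XR module's (address, port) pair is mapped to a distinct port on the router's single management address, so IPM sees all modules behind one IP address. This is a minimal sketch, assuming TCP/UDP-style ports and a hypothetical port range; a real NAPT implementation also tracks the remote endpoint, the protocol and connection timeouts, and the module-side addresses used below are hypothetical.

```python
from typing import Dict, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


class NaptTable:
    """Toy NAPT: many module endpoints share the host's management IP address."""

    def __init__(self, host_ip: str, first_port: int = 40000, last_port: int = 40999) -> None:
        self.host_ip = host_ip
        self._next_port = first_port
        self._last_port = last_port
        self._outbound: Dict[Endpoint, Endpoint] = {}  # module -> host-side endpoint
        self._inbound: Dict[Endpoint, Endpoint] = {}   # host-side endpoint -> module

    def translate_outbound(self, module_ep: Endpoint) -> Endpoint:
        """Source endpoint to use when a module's packet leaves via the host."""
        if module_ep in self._outbound:
            return self._outbound[module_ep]
        if self._next_port > self._last_port:
            raise RuntimeError("NAPT port range exhausted")
        host_ep = (self.host_ip, self._next_port)
        self._next_port += 1
        self._outbound[module_ep] = host_ep
        self._inbound[host_ep] = module_ep
        return host_ep

    def translate_inbound(self, host_ep: Endpoint) -> Endpoint:
        """Module endpoint for a reply arriving at the host; KeyError if unknown."""
        return self._inbound[host_ep]
```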

XR modules connect to IPM using a call-home procedure, in which the IPM IP address is learned from either the XR Communication Agent or a DHCP server; this simplifies XR module installation in the network.
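The address-learning step of the call-home procedure can be sketched as a small resolver. The post only says that the IPM address comes from either the XR Communication Agent or a DHCP server; giving the agent precedence when both are present, and the function name itself, are assumptions made for illustration.

```python
import ipaddress
from typing import Optional, Tuple


def resolve_ipm_address(agent_hint: Optional[str],
                        dhcp_hint: Optional[str]) -> Tuple[str, str]:
    """Return (source, address) of the IPM the module should call home to.

    ASSUMPTION: an address supplied by the Communication Agent wins over DHCP.
    ipaddress.ip_address() rejects malformed values and accepts IPv4 or IPv6.
    """
    for source, hint in (("agent", agent_hint), ("dhcp", dhcp_hint)):
        if hint:
            return source, str(ipaddress.ip_address(hint))
    raise LookupError("IPM address not learned from agent or DHCP; cannot call home")
```

Because the module initiates the connection, the operator does not need to know or configure the module's address in IPM beforehand.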

XR constellation driver inside TeraFlowSDN

Infinera is developing a new device driver for TeraFlowSDN, the XR constellation driver, which interfaces with the IPM northbound REST API and integrates XR point-to-multipoint technology so that it can be managed through TeraFlowSDN workflows, with TeraFlowSDN serving as an example of an operator back-office OSS solution.

The driver exposes point-to-multipoint connectivity services to the service layer as {device/port[.VLAN]} endpoints, allowing the service layer to configure the usable bandwidth for a port and directing IPM to reconfigure the digital subcarrier bandwidth accordingly.
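The `{device/port[.VLAN]}` endpoint notation and the bandwidth-to-subcarrier step can be sketched as follows. The parsing rules, the round-up to whole 25 Gb/s subcarriers and the 16-subcarrier ceiling (taken from the 400G hub described earlier) are assumptions for illustration; this is not the actual TeraFlowSDN or XR constellation driver code, and the device and port names are hypothetical.

```python
import math
import re
from dataclasses import dataclass
from typing import Optional

SUBCARRIER_RATE_GBPS = 25
MAX_SUBCARRIERS = 16

# device, "/", port (may itself contain "/" or "."), optional trailing ".VLAN"
_ENDPOINT_RE = re.compile(r"^(?P<device>[^/]+)/(?P<port>.+?)(?:\.(?P<vlan>\d+))?$")


@dataclass(frozen=True)
class Endpoint:
    device: str
    port: str
    vlan: Optional[int] = None


def parse_endpoint(text: str) -> Endpoint:
    """Split 'device/port[.VLAN]' into its parts, validating the VLAN ID."""
    match = _ENDPOINT_RE.match(text)
    if match is None:
        raise ValueError(f"not a device/port[.VLAN] endpoint: {text!r}")
    vlan = match.group("vlan")
    if vlan is not None and not 1 <= int(vlan) <= 4094:
        raise ValueError(f"VLAN ID out of range: {vlan}")
    return Endpoint(match.group("device"), match.group("port"),
                    int(vlan) if vlan is not None else None)


def subcarriers_for(bandwidth_gbps: float) -> int:
    """Whole 25 Gb/s subcarriers needed for a requested bandwidth (rounded up)."""
    count = math.ceil(bandwidth_gbps / SUBCARRIER_RATE_GBPS)
    if not 1 <= count <= MAX_SUBCARRIERS:
        raise ValueError(f"{bandwidth_gbps} Gb/s needs {count} subcarriers")
    return count
```

For example, a request for 110 Gb/s on `hub-router/XR-T1.100` would map to five subcarriers under these rules; a port name that itself ends in a dot and digits would be ambiguous in this notation.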

This leaves room for future development outside the XR constellation driver to deploy data plane service configuration to the HUB and LEAF router hosts. The data plane service could be handled by another new device driver that connects to the host router NOS management system, or even by the existing OpenConfig device driver, creating the host VLAN service for the digital subcarriers and handling its configuration and parametrization, including VLAN shaping and IP link address provisioning for new HUB-LEAF connectivity. The right option depends on the XR host router's capabilities and its existing management solution.
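As one illustration of the OpenConfig option, the sketch below plans a VLAN subinterface per leaf on the hub and leaf router ports and provisions point-to-point IPv4 link addresses from a /31 pool (RFC 3021). The payloads loosely follow the openconfig-interfaces, openconfig-vlan and openconfig-if-ip YANG models, but exact paths differ between model revisions and vendor implementations. VLAN shaping is omitted, and using the same VLAN ID on both ends, the interface names and the address pool are all assumptions.

```python
import ipaddress
from typing import Dict


def oc_vlan_subinterface(ifname: str, vlan_id: int, ip: str, prefix_len: int) -> dict:
    """OpenConfig-style JSON for one VLAN-tagged, IPv4-addressed subinterface.

    Loosely modeled on openconfig-interfaces/-vlan/-if-ip; check your NOS's revision.
    """
    return {
        "openconfig-interfaces:interface": [{
            "name": ifname,
            "subinterfaces": {"subinterface": [{
                "index": vlan_id,
                "config": {"index": vlan_id},
                "openconfig-vlan:vlan": {
                    "match": {"single-tagged": {"config": {"vlan-id": vlan_id}}}},
                "openconfig-if-ip:ipv4": {"addresses": {"address": [{
                    "ip": ip, "config": {"ip": ip, "prefix-length": prefix_len}}]}},
            }]},
        }]
    }


def plan_hub_leaf_links(hub_if: str, leaf_ifs: Dict[str, str], pool: str,
                        first_vlan: int = 100) -> Dict[str, dict]:
    """Give each leaf its own VLAN and IPv4 /31 link; return per-leaf hub/leaf payloads."""
    links = ipaddress.ip_network(pool).subnets(new_prefix=31)
    plan = {}
    for offset, (leaf, leaf_if) in enumerate(leaf_ifs.items()):
        link = next(links, None)
        if link is None:
            raise ValueError(f"address pool {pool} too small for {len(leaf_ifs)} leaves")
        hub_ip, leaf_ip = (str(addr) for addr in link)  # both /31 addresses are usable
        vlan = first_vlan + offset
        plan[leaf] = {
            "hub": oc_vlan_subinterface(hub_if, vlan, hub_ip, 31),
            "leaf": oc_vlan_subinterface(leaf_if, vlan, leaf_ip, 31),
        }
    return plan
```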